Modeling Formula One: Getting to the Forecast

The first step is the hardest. But not as hard as you'd think.

In the first entry of this series, we worked through some basic ideas in forecasting. We also examined the kinds of data we want to use for predicting the outcome of Grands Prix: drivers’ starting positions, lap time averages, and average position throughout the race.1

However, there’s an enormous gap between having identified those data points and being able to say Lewis Hamilton has a 7.86% chance of prevailing at Zandvoort.2

This entry takes the first steps in bridging the gap. And the ideas handled in the first article are the stepping stones needed to accomplish that goal.

Where We Left Off

In that first article, we established a couple of crucial points. First, a small set of data points can contain a large amount of encoded information. Second, methods exist to discern the relationship between that encoded stuff and some outcome we’re trying to predict.

And, critically, we examined why it’s important to shoot for forecasting a distribution of outcomes, not just a single guess. That constraint informed the choice to predict a GP’s result using data from that very same GP.

A reasonable reader might ask—how on earth does that make any sense? By the time the public can access the data from a Grand Prix (lap time averages, average position, etc.), it’ll be too late to do anything meaningful with a prediction! Books close, DFS contests lock, and friends become distinctly less impressed with clairvoyance.

It makes sense for this reason: we’re going to simulate the data about the race, and then use that set of simulations to generate our distribution of GP outcomes.

A Reasonable Guess

Simulating a driver’s lap averages and average position is, surprisingly, not a terribly demanding task. Both data points are continuous numeric variables—fancy phrasing for decimal numbers—and the human race has a long history of forecasting those.3

For decimal number data, there’s a handy tool that (usually) saves us a lot of time and trouble. It’s called the “Normal Distribution”. And as you may have guessed—it’s a kind of distribution, like the ones touched on in the first article.

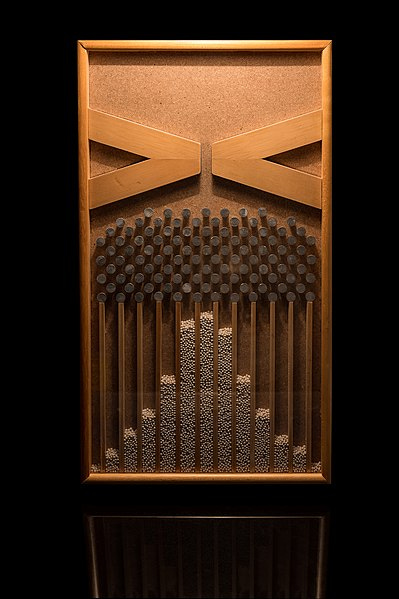

The Galton Board

As a refresher, a distribution is just a way to think about the shape of a set of outcomes. I’ve always loved the imagery of a Galton Board, pictured above.

When the board is in use, a collection of metal balls cascade down from the top segment, passing through a thicket of pegs. The balls bounce randomly off the pegs—left or right, left or right, left or right. Finally, each ball passes into one of the slots seen at the bottom of the board.

Notice where the balls tend to end up. Most cluster into the middle. A few slipped further to the left or right. Only a small number made it to the leftmost or rightmost slot.

That shape created by the balls is the shape of the “Normal Distribution”, and it appears everywhere in nature. I’ll avoid the math behind why. For now, it’s just important to realize that anytime you’re dealing with the distribution of numeric data, there is a very high chance that the distribution will take on the shape you see above.

For most numeric data, the “center” of the distribution is the average. Let’s say, for example, that Max Verstappen’s average lap time is 91 seconds.4 It’s fair to assume that in most of Max’s events, he’ll post an average lap time around 91 seconds, just like metal balls clustering into the center slots. In a few races, Max’s average time will be much higher. Those results are like the balls that slide all the way to the right. In a small number, the result is well below average. Those results are the balls all the way on the left. And occasionally, he’ll only be somewhat above or below that 91 mark. Those results are the balls near the middle.

As metal balls bounce off pegs and into slots, so too is Max’s race subject to random influences. Some benefit him. Some don’t. We expect it to usually, although not always, wash out close to his average—just as most of the bouncing balls will eventually fall into the center.

So, when faced with the task of predicting Max’s lap times in a race, all we have to do is mathematically draw up that “Normal Distribution” shape, centered on the average of his historical performances. There’s more math that goes into figuring out how stretched out the sides of that bell curve are, but let’s skip over it for now. Once we have the shape centered on Max’s historical average, we can pull random “results” from within the shape. The taller the shape is at a certain point, the more likely that number is to be our forecasted result.

In the language of the Galton board—to simulate a result, we pull a ball at random from the shape. Naturally, we’re more likely to get a ball from the center slots (lap times closer to average) than a ball from the peripheral slots (lap times that are extraordinarily bad or extraordinarily good). In that way, our simulation (or group of simulations) reflects a driver’s likely performance while allowing for variation.
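To make the "pull a ball from the shape" idea concrete, here is a minimal sketch using only Python's standard library. The lap times are made-up numbers, and using the sample standard deviation for the width of the curve is my assumption, since the article skips that part of the math.

```python
import random
import statistics

# Hypothetical recent average lap times in seconds (made-up numbers).
recent_avg_lap_times = [90.6, 91.4, 91.0]


def simulate_avg_lap_time(history, n_sims, seed=None):
    """Draw n_sims values from a normal curve fitted to `history`."""
    center = statistics.mean(history)   # where the bell curve sits
    spread = statistics.stdev(history)  # assumed measure of how wide it is
    rng = random.Random(seed)
    return [rng.gauss(center, spread) for _ in range(n_sims)]


sims = simulate_avg_lap_time(recent_avg_lap_times, n_sims=10_000, seed=42)

# Share of simulated results within one "spread" of the center.
# For a normal distribution this should land somewhere near 68%.
center = statistics.mean(recent_avg_lap_times)
spread = statistics.stdev(recent_avg_lap_times)
near_center = sum(abs(s - center) <= spread for s in sims) / len(sims)
print(f"mean of sims: {statistics.mean(sims):.2f}")
print(f"within one spread of center: {near_center:.1%}")
```

Most draws land near the center and only a handful end up far out on either side, which is the Galton board behavior described above.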

Phew. That’s one data point (average lap time) for one driver (Verstappen) settled. To get the data we need to forecast the Grand Prix, we need to roll out the process described above for both of our unknown data points (average lap times and average position) for each of the 20 drivers.5 Luckily, there are plenty of software tools that allow that job to be knocked out in a couple of minutes.
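Rolling the process out across the grid is mostly a pair of loops. The sketch below uses three drivers instead of twenty to stay short. The driver codes, the history values, and the `simulate_field` helper are all hypothetical, and the standard deviation is again my assumed stand-in for the width of each curve.

```python
import random
import statistics

# Hypothetical three-race histories; every value is invented for illustration.
# "lap" is a normalized average lap time, "pos" is average running position.
histories = {
    "VER": {"lap": [-0.8, -0.5, -0.9], "pos": [1.8, 2.4, 1.5]},
    "NOR": {"lap": [-0.6, -0.7, -0.4], "pos": [2.9, 2.1, 3.3]},
    "HAM": {"lap": [-0.1, -0.3, 0.1], "pos": [6.2, 5.1, 7.4]},
}


def simulate_field(histories, n_sims, seed=None):
    """Return {driver: {stat: [n_sims simulated values]}}."""
    rng = random.Random(seed)
    field = {}
    for driver, stats in histories.items():
        field[driver] = {}
        for stat, history in stats.items():
            center = statistics.mean(history)
            spread = statistics.stdev(history)  # assumed spread estimate
            field[driver][stat] = [
                rng.gauss(center, spread) for _ in range(n_sims)
            ]
    return field


field = simulate_field(histories, n_sims=10_000, seed=7)
for driver, stats in field.items():
    summary = {stat: round(statistics.mean(vals), 2) for stat, vals in stats.items()}
    print(driver, summary)
```

One caveat: nothing in this sketch stops a simulated average position from dipping below 1. Whether to clip such draws is a modeling choice this article does not cover.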

Picking a Window

An important piece of this whole discussion is “historical” performances. Those performances determine where on the number line we situate our normal distribution shape. But what does “historical” mean? Each of a driver’s race results? Absolutely not. Max today is more comparable to Max last year than Max at the start of his career. Even taking the entirety of the current season is fraught. Consider the difference in McLaren’s performance in March versus its ascendancy in June and July.

Picking the window of performances to consider is tough. Because we’re striving for simplicity, I calculated the correlation between a driver’s performance in a race and their average performance over the preceding X races.6 In this case, a correlation score can be thought of as a “similarity” score. Here’s that analysis for average lap time:

Things go downhill fast. With each additional race considered, the similarity decreases. Average position is in the same boat.

I set the number of races I’m willing to consider “historical” at three. While using two is a bit more accurate, I also don’t want the simulations to overreact to someone’s only good day of the season.
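For readers who want to try the window comparison themselves, here is a rough sketch of one way to do it. It assumes a plain Pearson correlation, and both helper functions and all of the data are hypothetical. The numbers are invented normalized lap times, so the printed scores say nothing about real drivers.

```python
import math
import statistics


def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    mean_x, mean_y = statistics.mean(xs), statistics.mean(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def window_correlation(series_by_driver, window):
    """Correlate each race's value with the mean of the `window` races before it."""
    trailing, actual = [], []
    for series in series_by_driver.values():
        for i in range(window, len(series)):
            trailing.append(statistics.mean(series[i - window:i]))
            actual.append(series[i])
    return pearson(trailing, actual)


# Hypothetical normalized average lap times, oldest race first.
series_by_driver = {
    "VER": [-0.9, -0.7, -0.8, -0.4, -0.6, -0.9, -0.5, -0.7],
    "HAM": [0.2, -0.1, 0.1, -0.3, 0.0, -0.2, 0.3, -0.1],
    "STR": [0.6, 0.9, 0.5, 0.8, 1.1, 0.7, 0.9, 0.6],
}

for window in range(1, 5):
    score = window_correlation(series_by_driver, window)
    print(f"window={window}: correlation={score:.3f}")
```

Running the same loop over real race data is what produces a curve of similarity against window size.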

Cramming Simulations Into a Box

With simulations of lap times and average position in hand, we’re ready to produce a proper forecast!

In the first article, we examined the ability of computers to learn relationships between data points and some result we’re trying to forecast. Suffice it to say that an ocean of methods exists for discerning what links our chosen variables and a driver’s finishing place.7 I picked one, cut it loose, and now have a handy model that takes our three data points in and spits out a predicted result.

So to get a forecast for a Grand Prix, we just have to feed that model a set of simulated race data and ask “given this information, which drivers finished where?” Take the predicted finishing spots, assign them a rank order—that’s your forecast.
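The "predict, then rank" step looks roughly like this. To be clear, `toy_model` is a hypothetical stand-in with arbitrary weights, not the trained model from this series, and the inputs are invented. The only point is the flow from three data points to a predicted finish to a rank order.

```python
def toy_model(start_pos, avg_lap, avg_pos):
    """Hypothetical stand-in for a trained model; the weights are arbitrary."""
    return 0.3 * start_pos + 0.7 * avg_pos + 2.0 * avg_lap


def forecast_order(race_inputs, model):
    """Predict a finish for each driver, then convert predictions to ranks."""
    predicted = {driver: model(**inputs) for driver, inputs in race_inputs.items()}
    ordered = sorted(predicted, key=predicted.get)  # lowest prediction first
    return {driver: place for place, driver in enumerate(ordered, start=1)}


# One simulated race: known starting position plus simulated lap and position data.
# All values are made up; avg_lap is a normalized lap time.
one_simulated_race = {
    "VER": {"start_pos": 1, "avg_lap": -0.7, "avg_pos": 2.1},
    "NOR": {"start_pos": 2, "avg_lap": -0.8, "avg_pos": 1.6},
    "HAM": {"start_pos": 7, "avg_lap": -0.1, "avg_pos": 6.0},
}

print(forecast_order(one_simulated_race, toy_model))
```

Ranking matters because a model's raw predictions are rarely clean integers. Two drivers might be predicted at 2.3 and 2.6, and the rank order is what turns that into second and third.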

That’s literally all. Once you do the heavy lifting of thinking through how to simulate an individual race, all that’s left is to cram the simulation(s) into your model and let it do its thing.

And as you may have figured out, if you create 10,000 simulations for each of the 20 drivers … you can simulate the Grand Prix 10,000 times. That gives you a distribution of 10,000 outcomes for each driver. As an added bonus—counting up the 786 simulations in which Lewis Hamilton wins allows you to claim the Brit has a 7.86% chance of prevailing at Zandvoort.
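The counting step is simple enough to sketch in a few lines. The finishing orders below are hand-built placeholders (not real model output), arranged so that Hamilton wins 786 of 10,000 races, and `win_probabilities` is a hypothetical helper name.

```python
from collections import Counter


def win_probabilities(simulated_orders):
    """simulated_orders holds one finishing order (winner first) per simulated race."""
    wins = Counter(order[0] for order in simulated_orders)
    n_races = len(simulated_orders)
    return {driver: count / n_races for driver, count in wins.items()}


# Placeholder results standing in for 10,000 simulated Grands Prix.
simulated_orders = (
    [["HAM", "VER", "NOR"]] * 786
    + [["VER", "NOR", "HAM"]] * 5_000
    + [["NOR", "VER", "HAM"]] * 4_214
)

probs = win_probabilities(simulated_orders)
print(f"HAM win chance: {probs['HAM']:.2%}")  # 786 / 10,000 = 7.86%
```

The same counting trick works for any outcome you care about, such as podiums or points finishes: swap the "finished first" check for a different condition.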

Looking Ahead

With 10,000 race simulations in hand, we’re able to perform detailed analysis of upcoming events. By considering all of a driver’s forecasted outcomes, you can determine what proportion of the time they’re worth their DFS salary. You can detect which drivers are “safer” roster choices, and which have the most upside.

More on that to come. For now, enjoy what’s left of the summer break. Next week, I’ll walk through how I use this model to build DFS lineups!

1. Yes, “Grands Prix” is the plural of Grand Prix. It surprised me too.

2. That’s just a number based on gut feeling, not on any formal analysis. As a side-note, if you do this sort of thing long enough, your “gut feelings” manifest as fractional percentages.

3. Just ask your friend who trades crypto. Additionally, some readers may object to the substitution of “continuous variable” for decimal number. Technically, yes, they’re not equivalent. For these purposes, however, they are.

4. No sensible model would use lap time in raw seconds. Time varies wildly across tracks, and a strong performance in Monaco would be wiped out by a mediocre performance at Spa. In the model-building process, I use normalized lap times, the engineering of which I’ll cover some time in the future. For now, I’m using regular old seconds just to keep the illustration simple.

5. The third data point used in this series, starting position, is known before lights out and no simulating is necessary. Unless you really, really want to get an edge before qualifying.

6. The data was from the last couple of seasons.

7. If machine learning is your thing, know I used XGBoost like an absolute normie.